Code Critique: Rich, Immediate Critiques of Coding Antipatterns

WebTA is an automated code critique system designed to support students learning programming in introductory and discipline-specific computing courses. Unlike traditional autograders, WebTA provides students with actionable feedback on the structure, behavior, style, and design of their code while they are actively engaged in development. The system draws on the principles of cognitive apprenticeship to help students recognize and address novice antipatterns: common mistakes that experts rarely make but that appear frequently in beginner submissions.

Originally developed to support Java programming courses at Michigan Technological University, WebTA has since been extended to handle multiple programming languages, including MATLAB, Python, and C. Its primary aim is to provide, at scale, the kind of formative critique that instructors offer in person, using a layered architecture that integrates with learning management systems such as Canvas. By identifying problematic patterns and suggesting improvements, WebTA fosters student reflection, iterative refinement, and more effective learning outcomes.

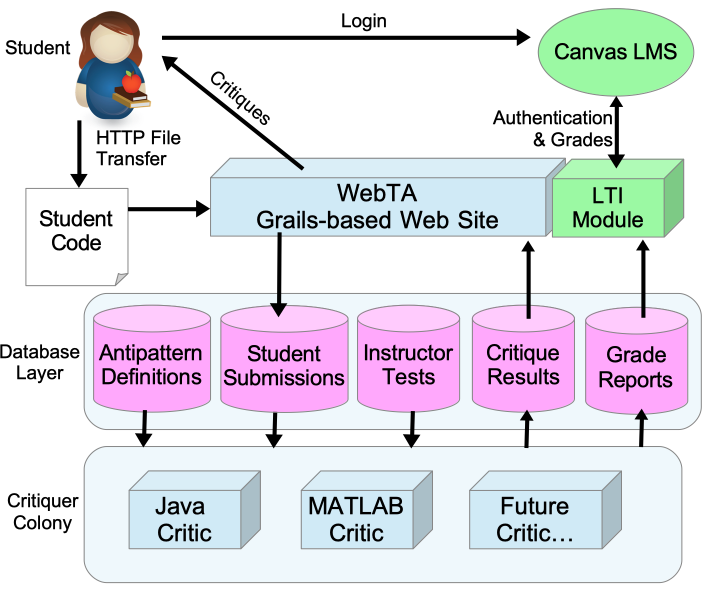

WebTA is built on a modular architecture that facilitates code critique by integrating tightly with Learning Management Systems (LMS) such as Canvas through Learning Tools Interoperability (LTI). Originally a monolithic Java and Grails application, WebTA evolved into a federated architecture where the frontend and backend systems communicate through a shared database. This modularity supports extensibility to multiple languages, enabling the addition of language-specific Code Critics such as the Java Critic and MATLAB Critic.

The architecture consists of:

- LTI Module & Web Frontend: Handles student authentication, assignment setup, and submission through Canvas.

- Critiquer Colony: A backend composed of separate modules for each language-specific critic (e.g., Java Critic, MATLAB Critic). Each critic performs compilation, testing, static analysis, and style checking.

- Database: Acts as the message bus and persistent store. Submission metadata, critique configurations, and feedback messages are all stored and accessed through structured tables.
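
Using a shared database as a message bus can be sketched as follows. This is a minimal illustration under stated assumptions: a single hypothetical `submission` table whose `status` column the frontend writes and the language-specific critics poll. The table, its columns, and the `submit` / `claim_next` helpers are invented for the example and are not WebTA's actual schema.

```python
import sqlite3

# Hypothetical schema (not WebTA's): the frontend inserts rows with status
# 'pending'; a critic for the matching language claims and processes them.
SCHEMA = """
CREATE TABLE submission (
    id        INTEGER PRIMARY KEY,
    language  TEXT NOT NULL,
    status    TEXT NOT NULL DEFAULT 'pending'
)
"""

def submit(conn, language):
    """Frontend side: record a new submission and return its id."""
    cur = conn.execute("INSERT INTO submission (language) VALUES (?)", (language,))
    conn.commit()
    return cur.lastrowid

def claim_next(conn, language):
    """Critic side: claim the oldest pending submission for one language."""
    row = conn.execute(
        "SELECT id FROM submission WHERE language = ? AND status = 'pending' "
        "ORDER BY id LIMIT 1",
        (language,),
    ).fetchone()
    if row is None:
        return None
    # Only claim the row if it is still pending, so two critics cannot take it.
    updated = conn.execute(
        "UPDATE submission SET status = 'claimed' WHERE id = ? AND status = 'pending'",
        (row[0],),
    ).rowcount
    conn.commit()
    return row[0] if updated == 1 else None

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
java_id = submit(conn, "java")
matlab_id = submit(conn, "matlab")
print(claim_next(conn, "matlab"))  # prints 2: the MATLAB critic sees only MATLAB work
print(claim_next(conn, "matlab"))  # prints None: nothing left to claim
```

The conditional `UPDATE ... WHERE status = 'pending'` is one simple way to keep two critic processes from claiming the same row; a production system might rely on the database's own locking or transactions instead.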

WebTA supports fine-grained instructor configuration through an LMS assignment dashboard. Each assignment specifies a set of processes to be executed for every student submission. These may include:

- Compilation and syntax validation

- Behavioral testing using unit tests

- Static code analysis for design antipatterns

- Style critique for adherence to naming and formatting conventions
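
As an illustration of the static-analysis step, the sketch below flags one classic novice antipattern: comparing a boolean expression to a literal `True` or `False`. It uses Python's standard `ast` module only because Python is among the supported languages; the rule, its message text, and the `find_boolean_comparisons` name are hypothetical and are not taken from WebTA's critics.

```python
import ast

def find_boolean_comparisons(source):
    """Return (line, col, message) for each `== True/False` or `!= True/False`
    where the boolean literal appears on the right-hand side."""
    critiques = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Compare):
            continue
        for op, right in zip(node.ops, node.comparators):
            if (isinstance(op, (ast.Eq, ast.NotEq))
                    and isinstance(right, ast.Constant)
                    and isinstance(right.value, bool)):
                critiques.append((
                    node.lineno,
                    node.col_offset,
                    f"Comparing to {right.value} is redundant; use the condition directly.",
                ))
    return critiques

student_code = """
done = False
if done == True:
    print("finished")
"""
for line, col, msg in find_boolean_comparisons(student_code):
    print(f"{line}:{col} {msg}")
```

Returning a line and column with each message mirrors how critiques are keyed to a location in the submitted code.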

The instructor selects the appropriate critiquer module (e.g., Java or MATLAB) per assignment, configuring language-specific tests and expectations. These configurations are stored in the database within a Config table associated with assignment metadata. For instance, the Config object records fields such as total points, target language, and processes (compile, test, slice, trace, check style).
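
A per-assignment configuration record of this kind might look like the following sketch. Only the field meanings (total points, target language, processes) and the process names come from the text; the `Config` and `ProcessKind` classes, the `assignment_id` field, and the validation rules are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum

class ProcessKind(Enum):
    # Process names listed in the text; the enum itself is hypothetical.
    COMPILE = "compile"
    TEST = "test"
    SLICE = "slice"
    TRACE = "trace"
    CHECK_STYLE = "check style"

@dataclass
class Config:
    """Hypothetical shape of a per-assignment Config record."""
    assignment_id: int
    total_points: float
    language: str                       # e.g. "java" or "matlab"
    processes: list = field(default_factory=list)

    def __post_init__(self):
        if self.total_points < 0:
            raise ValueError("total_points must be non-negative")
        # Keep the instructor's order, since processes run sequentially.
        # Unknown process names raise ValueError from the Enum lookup.
        self.processes = [ProcessKind(p) if isinstance(p, str) else p
                          for p in self.processes]

cfg = Config(assignment_id=42, total_points=10, language="java",
             processes=["compile", "test", "check style"])
print([p.value for p in cfg.processes])  # ['compile', 'test', 'check style']
```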

Students interact with WebTA through a Canvas assignment. Upon submission, the following operational flow is triggered:

- The LTI connection generates a session ID and stores student/course metadata in the database.

- Submitted files are logged into the Submission table along with timestamps.

- Each process (compile, test, analyze) is performed sequentially. Outcomes are stored in the Process table.

- Feedback is generated based on the detection of antipatterns, runtime errors, or style violations. These critiques are recorded in the Critique table, keyed by submission ID and line/column location in the code.
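
The flow above can be sketched as a small pipeline that runs each step in order, writes one row per step to a process table, and writes each finding to a critique table keyed by submission ID and line/column. The schema, the `run_pipeline` helper, and the two step functions are hypothetical stand-ins; a real critic would compile, run unit tests, and perform static analysis.

```python
import sqlite3

# Hypothetical schema loosely modeled on the Process and Critique tables.
SCHEMA = """
CREATE TABLE process (
    id            INTEGER PRIMARY KEY,
    submission_id INTEGER NOT NULL,
    name          TEXT NOT NULL,
    passed        INTEGER NOT NULL
);
CREATE TABLE critique (
    id            INTEGER PRIMARY KEY,
    submission_id INTEGER NOT NULL,
    process_id    INTEGER NOT NULL REFERENCES process(id),
    line          INTEGER,
    col           INTEGER,
    message       TEXT NOT NULL
);
"""

# Stand-in steps: each returns a list of (line, col, message) findings;
# an empty list means the step passed.
def compile_step(source):
    return []

def style_step(source):
    findings = []
    for lineno, text in enumerate(source.splitlines(), start=1):
        if len(text) > 80:
            # Column 81 is the first character past the limit.
            findings.append((lineno, 81, "Line is longer than 80 characters."))
    return findings

def run_pipeline(conn, submission_id, source, steps):
    """Run each step in order, storing one process row and its critiques."""
    for name, step in steps:
        findings = step(source)
        cur = conn.execute(
            "INSERT INTO process (submission_id, name, passed) VALUES (?, ?, ?)",
            (submission_id, name, int(not findings)),
        )
        for line, col, message in findings:
            conn.execute(
                "INSERT INTO critique (submission_id, process_id, line, col, message) "
                "VALUES (?, ?, ?, ?, ?)",
                (submission_id, cur.lastrowid, line, col, message),
            )
    conn.commit()

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
source = "int x = 1;\n" + "// " + "a" * 90 + "\n"
run_pipeline(conn, 7, source, [("compile", compile_step), ("check style", style_step)])
print(conn.execute("SELECT name, passed FROM process ORDER BY id").fetchall())
print(conn.execute("SELECT line, col, message FROM critique").fetchall())
```

Because each critique row stores the `process_id` of the step that produced it, a critique can be joined back to the event that triggered it.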

This model allows for detailed traceability: each critique is linked to its antecedent event (e.g., a failed test or AST match), making the feedback explainable and reproducible.

Students benefit from immediate, actionable feedback while instructors gain access to analytic data about submission patterns, common errors, and student progress over time.
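
As one example of the instructor-side analytics, a query along these lines could surface the most common critiques across a class. The flattened `critique` table with a `student` column and the sample rows are purely illustrative and are not WebTA data or its schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical, simplified table for the example.
conn.execute(
    "CREATE TABLE critique (submission_id INTEGER, student TEXT, message TEXT)"
)
# Illustrative sample rows only; real rows would come from the critics.
conn.executemany(
    "INSERT INTO critique VALUES (?, ?, ?)",
    [
        (1, "alice", "Redundant comparison to true"),
        (2, "bob", "Redundant comparison to true"),
        (3, "bob", "Missing break in switch"),
        (4, "carol", "Redundant comparison to true"),
    ],
)

# Most frequent critiques, and how many distinct students received each.
rows = conn.execute(
    """
    SELECT message, COUNT(*) AS occurrences, COUNT(DISTINCT student) AS students
    FROM critique
    GROUP BY message
    ORDER BY occurrences DESC
    """
).fetchall()
for message, occurrences, students in rows:
    print(f"{occurrences:2d} x {message} ({students} students)")
```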

WebTA: Automated Code Critique Demo: Experience using our WebTA tool to receive automated feedback on source code.

This work was partly funded by the National Science Foundation awards #2142309 and #1504860.